2023-5-8 AI Insights: Novel image editing technique, Conditioning pretrained unconditional generative models, and more!

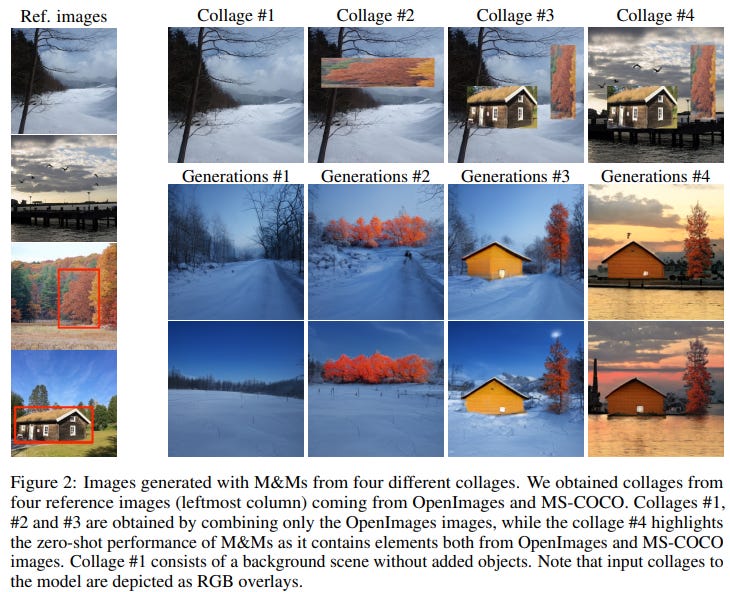

Controllable Image Generation via Collage Representations

Generative AI has made tremendous strides in recent years, with applications ranging from art and design to data augmentation and virtual reality. However, one significant challenge remains: achieving fine-grained controllability in image generation. Text-based diffusion models and layout-based conditional models have made some progress in this area, but they often fall short in providing detailed appearance characteristics. Through an elegant solution, the Mix and Match (M&M) model leverages collage representations to achieve superior scene controllability without compromising image quality.

Problem Statement

Fine-grained generative controllability is essential for tasks like image editing, style transfer, and content generation. Traditional methods, such as text-based diffusion models, require intricate prompt engineering, while layout-based conditional models depend on coarse semantic labels that cannot capture detailed appearance characteristics. Moreover, existing models that attempt to create interpretable and linearly-separable latent spaces for object appearance control face challenges in identifying which latent dimensions correspond to specific features, necessitating semi-supervised training approaches.

Proposed Solution: Mix and Match (M&M) Model

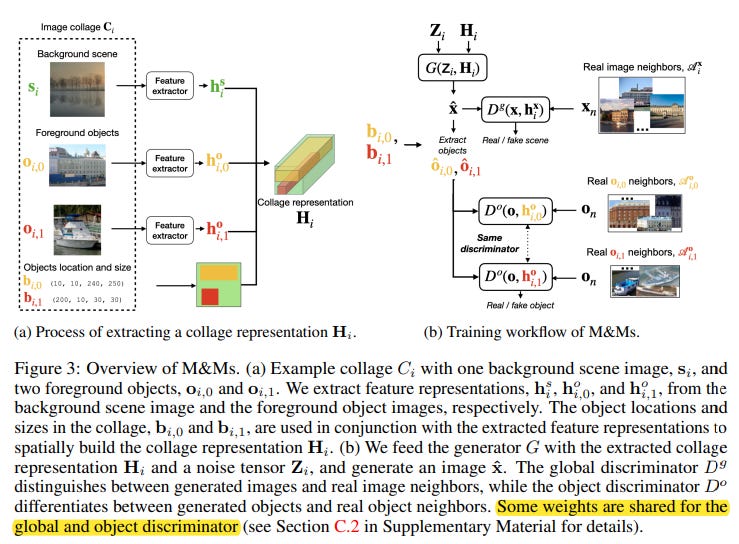

At a high level, this work proposes the Mix and Match (M&M) model, which works by taking an image collage as input, extracting representations of the background image and foreground objects, then spatially arranging these representations to generate high quality, non-deterministic images that are highly similar to the input collage.

Key Components of the M&M Model

The M&M model consists of several key components, including a pre-trained feature extractor, a generator, and two discriminators operating at the image and object levels. By leveraging neighboring instances in feature space, the M&M model can model local densities and blend multiple localized distributions (one per collage element) into coherent images.

Generator: The generator takes in a noise tensor Z_i and a representation tensor H_i. Z_i is composed of scene noise and object noise vectors spatially arranged using collage bounding boxes, while H_i encodes the image collage's background scene and each collage element into feature space, arranged using bounding boxes.

Training: The M&M model employs global and object discriminators to discern between k-NN for image x and images generated conditioned on H_i. These discriminators share some weights and use cosine similarity to compute neighbors.

Explicit Key Contributions:

The M&M model introduces collage-based conditioning as a simple yet effective way to control scene generation.

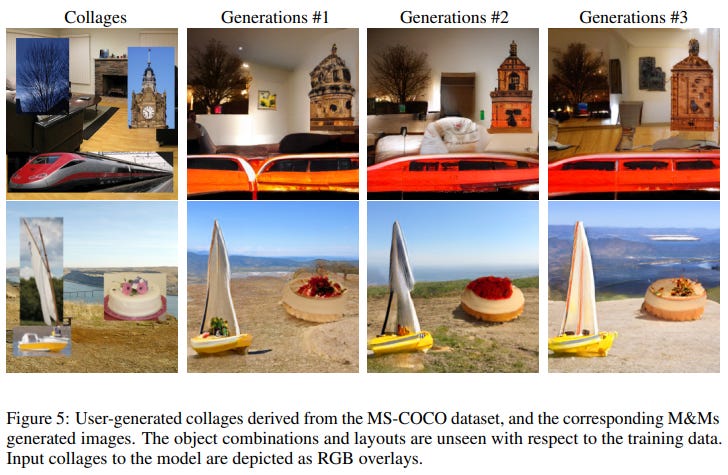

M&M generates coherent images from collages that combine random objects and scenes, offering superior scene controllability without affecting image quality.

Comparison with SOTA

The M&M method was tested for zero-shot transfer on MS-COCO validation images by conditioning it on collages derived from the dataset. When compared with recent text-to-image models in terms of zero-shot FID, M&M achieves competitive results, surpassing DALL-E despite using a smaller model with 131× fewer parameters and being trained on 147× fewer images. The method also demonstrates exceptional controllability, quality, and diversity when combining objects and scenes from different images using user-chosen bounding box coordinates to construct creative collages.

Future Works and Limitations

The current M&M model leverages features from a pre-trained feature extractor and depends on it. Jointly training M&M with the feature extractor could be explored in future work. Additionally, when objects in the collage are cluttered and significantly overlap, the quality of the generated object can quickly degrade. Future research could address this limitation and further improve the controllability and quality of the generated images.

Conclusion

The Mix and Match (M&M) model represents a significant step forward in achieving fine-grained generative controllability in image generation. By utilizing collage representations, the M&M model enables enhanced control over scene generation while maintaining high image quality. This novel approach holds great promise for applications in image editing, style transfer, and content generation, opening new avenues for exploration in the field of Generative AI.

TR0N: Translator Networks for 0-Shot Plug-and-Play Conditional Generation

Problem Statement

One of the main challenges in AI and DL is properly and efficiently leveraging existing large pre-trained models in a modular fashion. The authors of this paper tackle this issue by focusing on conditional generation, where the goal is to generate data samples that satisfy a specific condition.

Related Works

TR0N builds upon a variety of prior works, including conditional models, pushforward models, energy-based models (EBMs), and Langevin dynamics. A key aspect of TR0N is its ability to sample from a distribution defined by the proportionality of p(z|c) to exp(-beta * E(z|c)), where E(z|c) is an energy function that guides the generation of data samples satisfying a given condition.

Key Contributions

The major contribution of this paper is the introduction of TR0N, which takes an unconditional generative model and turns it into a conditional model. It does so by introducing a translator network that better initializes the optimization process, taking a condition as input and producing a corresponding latent variable z with a small energy value E(z|c).

This approach results in an amortized optimization, distributing the costly optimization process across the translator network and making it more efficient. Importantly, TR0N is a zero-shot method, meaning it only requires training a lightweight translator network while keeping the generator and auxiliary models frozen. Additionally, TR0N is model-agnostic, which makes it highly flexible and suitable for use with any generator or auxiliary model.

Personal Thoughts

I believe this paper is significant for several reasons. First, the introduction of TR0N addresses a critical challenge in AI and DL, enabling more efficient use of pre-trained models in a modular fashion. This is particularly important as the field continues to develop increasingly complex models.

Second, the translator network at the heart of TR0N is lightweight, making it accessible to researchers with limited computational resources. The zero-shot nature of the approach further reduces the computational burden by only requiring the training of the translator network.

Finally, the model-agnostic nature of TR0N makes it a versatile and adaptable framework. As newer state-of-the-art models become available, TR0N can be easily updated to leverage these advances.

In conclusion, the development of TR0N represents a significant step forward in the field of AI and DL. Its ability to efficiently and flexibly generate conditional data samples has the potential to unlock new possibilities for researchers and practitioners alike. I eagerly anticipate seeing the impact of TR0N on future research and applications.

Objectives Matter: Understanding the Impact of Self-Supervised Objectives on Vision Transformer Representations

In recent years, self-supervised learning (SSL) has made significant strides in the field of AI and deep learning. One area that has seen extensive research is the impact of different SSL objectives on vision transformer (ViT) representations. In this blog post, we will delve into a paper by Shekhar et al., exploring the differences between Joint-embedding (JE) based learning and reconstruction-based (REC) learning and their impact on ViT representations.

Analyzing Differences and Impacts

The primary focus of the paper is to analyze the differences and impacts of JE-based learning and REC-based learning. JE encourages representations that maximize view invariance between samples from the same image, while REC objectives train models to reconstruct images in pixel space from masked input. The paper highlights that it's still unclear what information is learned and discarded during SSL pre-training.

Related Works

Previous works have also tried to combine JE and REC, like with Masked Siamese networks. Combining objectives lead to improved performance, but the reason for this improvement remains unclear.

Key Contributions

The paper offers several key contributions to the understanding of SSL objectives on ViT representations:

JE representations are more similar to each other than REC representations. Differences arise early in the network and are concentrated in Layer Norm and MHSA layers.

JE models contain more linearly decodable representations because all relevant class discriminative information is available in the final pre-projector layer CLS token. In contrast, REC models lack key invariances and distribute class discriminative information across layers.

Training probes on multiple layers from REC models improves transfer.

Fine-tuning REC models make them more similar to JE models by reorganizing class information into the final layer.

Personal Thoughts

This paper offers valuable insights for early career researchers in AI and deep learning. Understanding the impact of different SSL objectives on ViT representations is crucial for developing better and more efficient deep learning models.

The paper's findings on the distribution of class discriminative information across REC model layers and its concentration in the final layer of JE models are particularly interesting. It opens up possibilities for further research on how SSL objectives affect multimodal representation learning, such as in models like CLIP and Omni-MAE.

In conclusion, the paper by Shekhar et al. serves as a valuable resource for understanding the impact of self-supervised objectives on vision transformer representations. It provides a solid foundation for further research into the development of more efficient and effective deep learning models.